When developing data pipelines, we chain together processing steps, lookups, and computations to reshape source data into a target form.

The development cycle looks something like this:

- add transformation step/calculation

- run against incoming data

- verify intended effect

- move on to next transformation task

- repeat

If we’re coding pipelines, the simplest way of verifying the effect of our transformations is to put logging statements at the appropriate places, and run the pipeline.
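As a rough illustration of the logging approach, here is a minimal Python sketch. The step functions (`parse_amount`, `add_gross`), the row format, and the tax rate are all made up for this example and do not come from any particular pipeline framework.

```python
import logging

logging.basicConfig(level=logging.DEBUG, format="%(levelname)s %(message)s")
log = logging.getLogger("pipeline")


def parse_amount(row):
    """Hypothetical step: convert the raw amount string to a float."""
    return {**row, "amount": float(row["amount"])}


def add_gross(row, tax_rate=0.2):
    """Hypothetical step: derive a gross amount from the net amount."""
    return {**row, "gross": round(row["amount"] * (1 + tax_rate), 2)}


def run_pipeline(rows):
    """Run every step for every row, logging each intermediate result."""
    results = []
    for row in rows:
        parsed = parse_amount(row)
        log.debug("after parse_amount: %r", parsed)
        enriched = add_gross(parsed)
        log.debug("after add_gross: %r", enriched)
        results.append(enriched)
    return results


# Verifying a change to add_gross still means running parse_amount first.
output = run_pipeline([{"id": 1, "amount": "10.50"}, {"id": 2, "amount": "4.00"}])
```

Even in this tiny case, checking the newest step requires running everything in front of it and then scanning the log for the relevant lines.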

If we’re using a graphical tool, it most likely supports test runs. We can inspect the output of individual steps and confirm that our design works as expected.

Both of these approaches share a shortcoming: they run the entire pipeline. Every run carries the cognitive overhead of starting the whole pipeline and then finding our way back to the spot we’re working on. The pipeline may also have to do some heavy lifting before any data even arrives at that spot, for example when we’re working after a sort or aggregation step that needs to see all data before forwarding anything. Feedback is therefore delayed, and as the pipeline grows, each change takes longer to verify.
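The effect of a blocking step can be sketched with Python generators. The steps below (`source`, `sort_step`, `double_step`) are hypothetical stand-ins, not part of any real tool. The sketch counts how many source rows must be read before the step under development produces its first result.

```python
pulled = []  # records which source rows have been read so far


def source(n):
    """Hypothetical source step: yields rows one at a time."""
    for i in range(n):
        pulled.append(i)
        yield {"id": i, "value": (i * 7) % 5}


def sort_step(rows):
    """Blocking step: sorted() consumes all input before emitting anything."""
    yield from sorted(rows, key=lambda r: r["value"])


def double_step(rows):
    """Streaming step: stands in for the step we are currently developing."""
    for row in rows:
        yield {**row, "value": row["value"] * 2}


# Ask for just the first output row of the step under development.
first = next(double_step(sort_step(source(1000))))

# The sort had to read every source row before that one row came through.
rows_read_for_first_result = len(pulled)
```

Although only a single output row was requested, all 1000 source rows were read first, which is the delayed feedback described above.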

To some extent we can work around these limitations by breaking up the data pipelines into smaller pieces. But we’re then burdened with preparing adequate test data for each individual sub-pipeline. We can’t tap into the source data directly, as this would only fit our very first sub-pipeline, and the output of that is what feeds the others.

Tweakstreet gives you immediate feedback

We designed Tweakstreet to allow for a much shorter feedback cycle when working on transformations. There is no need to re-run the whole pipeline all the time. In fact, you can get immediate feedback on most calculations.

An example task

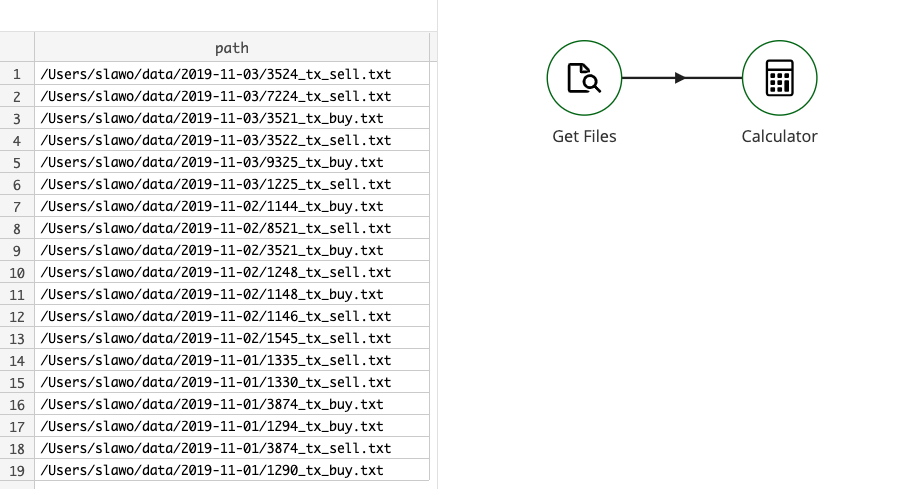

Let’s look at an example that is fairly common in batch data processing. The pipeline is supposed to read a set of CSV data files which are stored in the following file system layout:

A whole data set following this pattern:

Given one data file path the requirements are:

- extract the date

- extract customer number

- extract transaction type

Our pipeline would then hand off the path and the extracted metadata to a sub-pipeline responsible for loading the file contents.

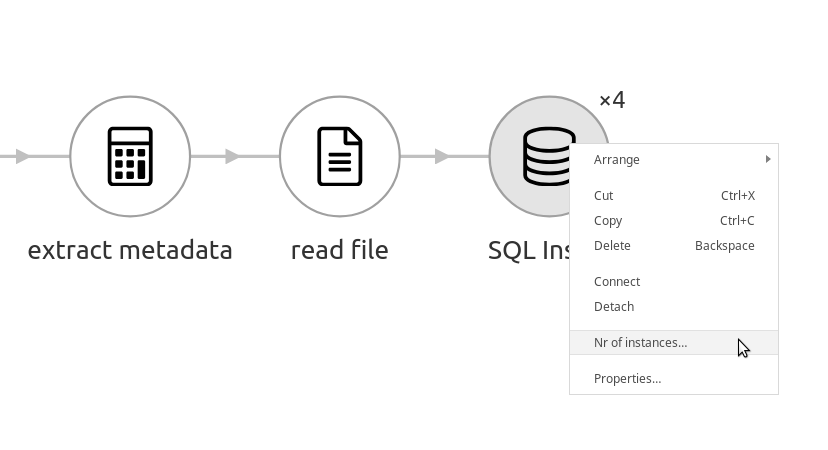

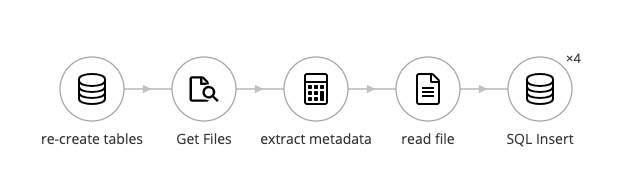

You could start by discovering the files to be loaded using the Get Files step and passing the paths to a subsequent calculator step for metadata extraction. Like so:

For each input row, the calculator can now perform the metadata extraction. The current path is available in scope as `in.path`.

The inline-calculation approach

There are several good ways to extract and validate the data. I’ll go with splitting the path on `/` and then extracting the information from each part.
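To make the split-and-extract idea concrete, here is a minimal sketch in Python rather than in Tweakstreet’s own expression language. The file layout it assumes, `<root>/<YYYY-MM-DD>/customer_<number>/<type>.csv`, is purely hypothetical and chosen for illustration; the function name `extract_metadata` and the returned keys are likewise assumptions.

```python
from datetime import date, datetime


def extract_metadata(path):
    """Split a path on "/" and extract metadata from its last three parts.

    Assumes the hypothetical layout
    <root>/<YYYY-MM-DD>/customer_<number>/<type>.csv
    """
    parts = path.strip("/").split("/")
    if len(parts) < 3:
        raise ValueError(f"path has too few parts: {path!r}")
    date_part, customer_part, file_part = parts[-3:]

    # strptime raises ValueError if the folder name is not a valid date.
    file_date = datetime.strptime(date_part, "%Y-%m-%d").date()

    prefix = "customer_"
    digits = customer_part[len(prefix):]
    if not customer_part.startswith(prefix) or not digits.isdecimal():
        raise ValueError(f"unexpected customer folder: {customer_part!r}")
    customer_number = int(digits)

    suffix = ".csv"
    if not file_part.endswith(suffix) or len(file_part) == len(suffix):
        raise ValueError(f"unexpected file name: {file_part!r}")
    transaction_type = file_part[: -len(suffix)]

    return {
        "path": path,
        "date": file_date,
        "customer": customer_number,
        "type": transaction_type,
    }


meta = extract_metadata("/data/2019-05-17/customer_00042/refunds.csv")
```

Each part is validated as it is extracted, so a path that does not match the assumed layout fails loudly instead of producing partial metadata.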

Tweakstreet’s ability to evaluate expressions inside step configuration allows you to develop computation expressions inline, getting results immediately. Once you’re satisfied with your computations, you switch from test data to input data and go back to run-and-view to assess the big picture.

The following clip shows the entire development cycle for the required data extraction, verifying each step along the way.