In this article, I am going to demonstrate how to load JSON data into a relational schema. I’ll be using random user data, as generated by the https://randomuser.me/ public API. The generated user data is loaded into a Postgres database to create a test dataset of 1000 users.

I will be using the Tweakstreet data processing tool (preview build 27) for this task. The preview builds are free. The GA version will remain free for personal and evaluation use.

The final data flow performing the load is available for download. If you’d like to run it yourself, unzip the download, and open the loading.dfl file with Tweakstreet. Make sure to drop the Postgres JDBC driver into your $HOME/.tweakstreet/drivers folder as described in the configuration documentation. Change the database connection definition to match your test database. You can access database connections by selecting the menu option: Flow → Properties…

Input format and output schema

The randomuser.me API generates user information in the following JSON format:

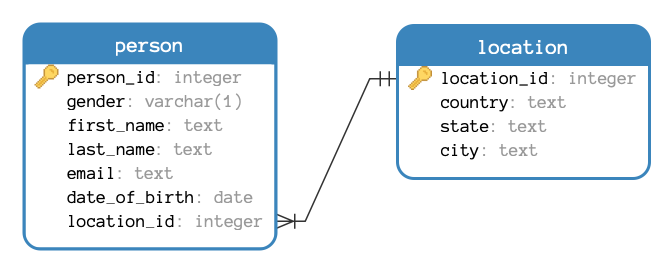

I’d like to extract a subset of the fields into a small relational schema:

Tweaking the request

Since the API is public, and requires only a single GET request, you can simply use your browser to see the API results. The API documentation gives us some options to tweak the request:

The basic URL is: https://randomuser.me/api/

Since I’d like the results to be stable, I’d like to fix the API version, which leads to: https://randomuser.me/api/1.3/

Depending on your browser you may see unformatted JSON output. Let’s make sure it’s human-readable by adding a corresponding parameter: https://randomuser.me/api/1.3/?format=pretty

Next, I’d like to optimize response time by requesting only fields we need: https://randomuser.me/api/1.3/?format=pretty&inc=gender,name,email,dob,location

We want consistent outcomes during development. If something goes wrong for a particular record, we’d like to repeat the request and get the same results back so we can debug the issue. This is done using a seed parameter. Same seed value generates same results: https://randomuser.me/api/1.3/?format=pretty&inc=gender,name,email,dob,location&seed=myseed

Finally, we need more than one result. We can start with 10 results for the development cycle, then turn it up to 1000 once we’re satisfied with the import process: https://randomuser.me/api/1.3/?format=pretty&inc=gender,name,email,dob,location&seed=myseed&results=10

Now our JSON response looks like this:

Import strategy

The import process consists the following major sections:

- make HTTP request to retrieve the data

- generate individual row per user in API response

- extract relevant person and location fields from user record

- ensure location database record exists, reuse location ids if possible

- write person record

The data flow

The HTTP Request

The data flow starts with the HTTP Request step retrieving the server response. Attaching a dummy step makes it possible to track the data passing between steps. In our case it will be a single row with the response string from the HTTP request:

Itemizing users

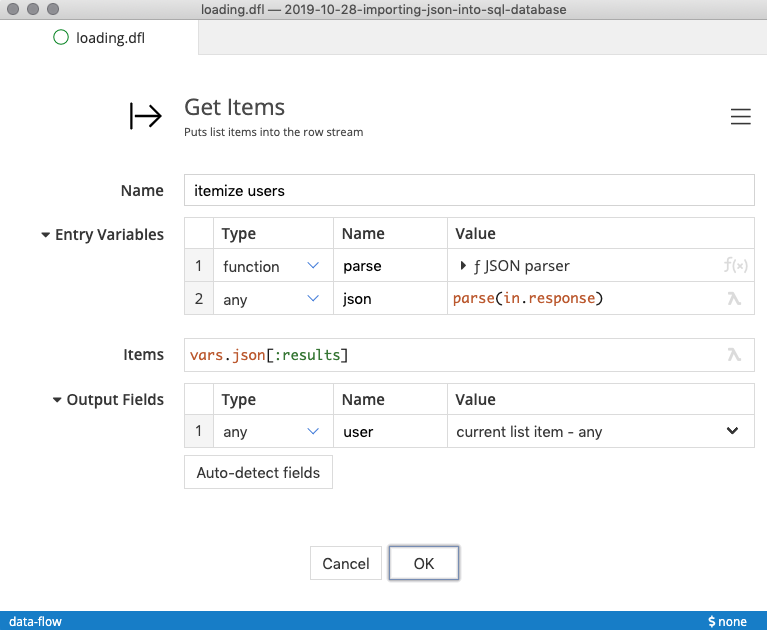

We want to do two things with the response:

- Parse it as JSON, to make the data navigable

- Generate a row for every item in the

response["results"]array

The Get Items step iterates over a list of items, generating a row for each. The parsing of the data is using the built-in JSON parser function in the entry variables section of the step.

Extracting fields

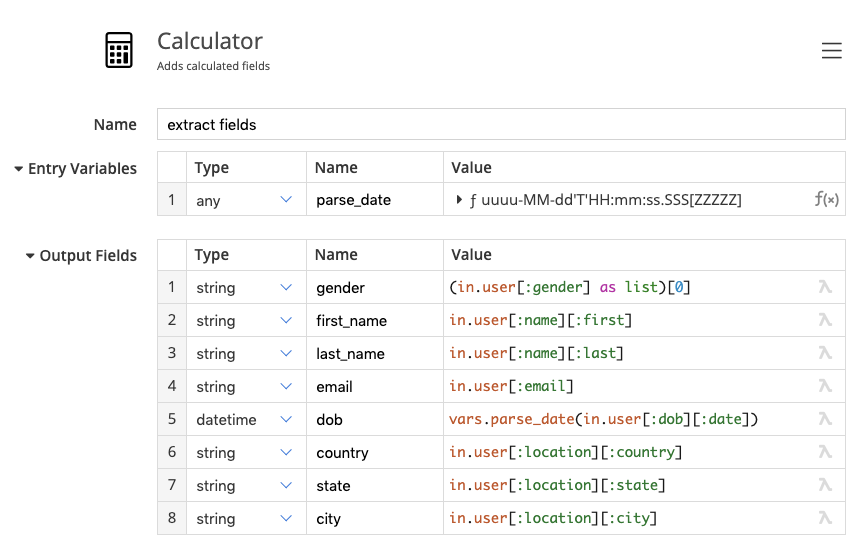

The next step is to extract the desired field values for more convenient preview and assignment to database fields. The calculator step is suitable for this, it performs arbitrary calculations for each passing row. In our case, it extracts the desired field values to the top for convenient preview using some formula expressions:

The built-in data parsing function is used with a standard formatting string to convert the date of birth from a string to an actual datetime value. The one-character gender marker is extracted from the first character of the gender string, so in our API it will be always be m or f

When running the flow, the data tracking shows the extracted fields:

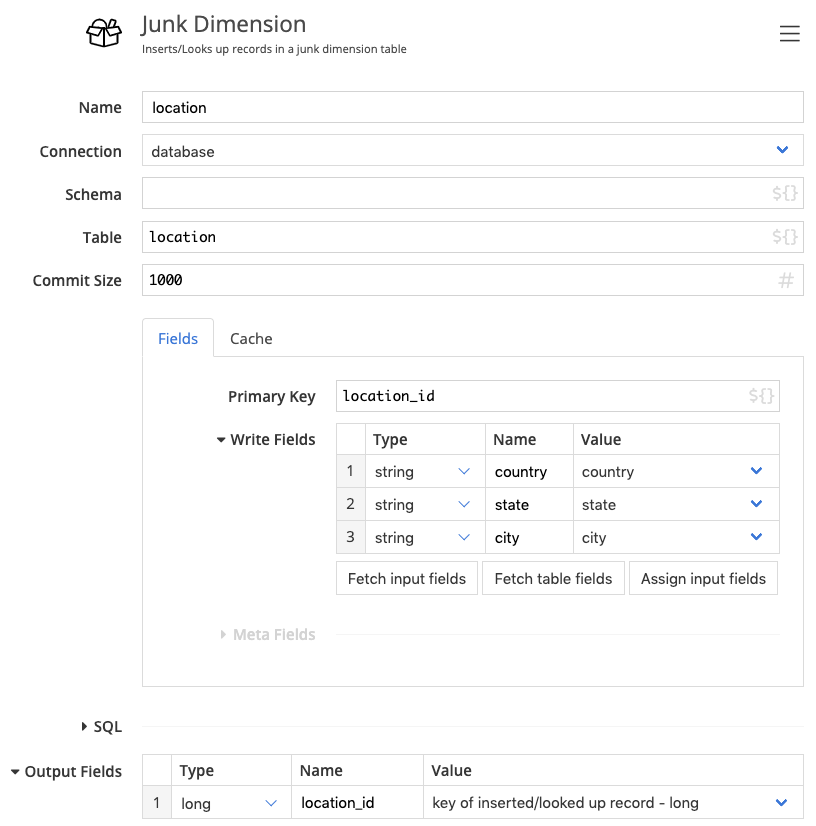

Populating the location table

We want to ensure there is a combination of the country , state , and city fields in our location table, and retrieve that location’s id to place it in the person record. The Junk Dimension step performs this exact functionality:

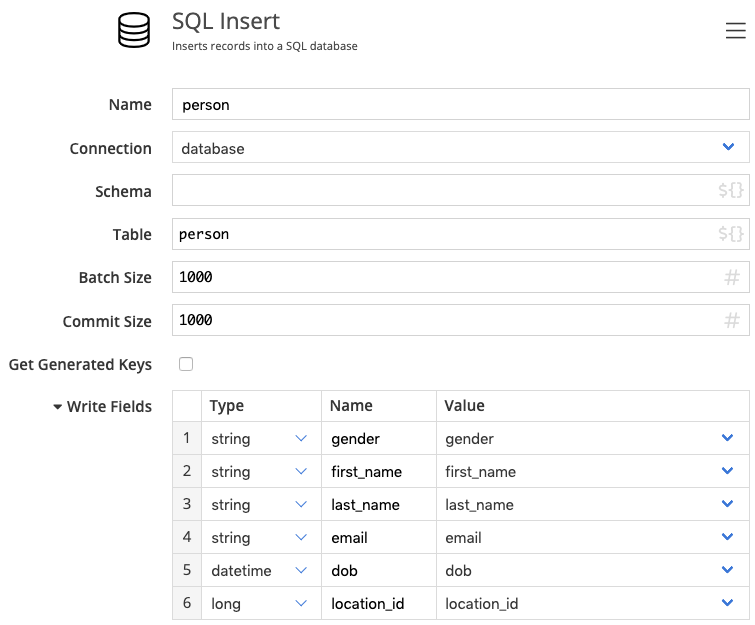

Writing person records

The SQL Insert step inserts a record for each passing row. We’ll just configure the fields we need:

Creating the tables

The location and person tables must be created before the data flow can populate them. The Junk Dimension and SQL Insert step each can generate the SQL for table and index creation, but as a design-principle they do not execute it autonomously. Table and Index creation are always explicit in Tweakstreet. We can copy the statements the steps generate into a SQL Script step and put it in front of the entire flow.

For Postgres 11 the following DDL does the job:

Running end-to-end

We’re now ready to run a complete cycle, which will re-create and re-populate our table. It is time to change the results parameter on the request URL to load 1000 records, and also potentially remove the seed parameter to trigger different results on each load:

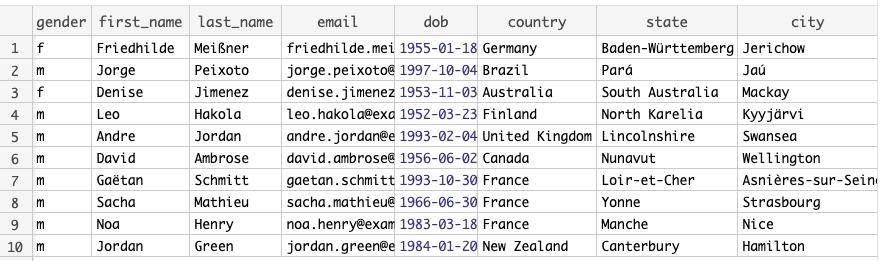

The loaded data

After the flow completes, the data is loaded as expected: